Memory in Language Model-Enabled Agents

Created by Yuwei Sun

Language models are emerging as potential planners and world models for agents in virtual environments. This post looks at the capabilities of LLMs for decision-making and environmental understanding within simulated worlds, with a focus on how they store and use memory.

Memory of Language Models

The simplest form of memory for a language model is its context window, which can hold only a limited number of tokens. If the conversation history exceeds the model's token limit, earlier messages are truncated and their context is lost. Scaling up the input context length has been explored in LLM studies; for example, GPT-3 doubled the input length of GPT-2 from 1k to 2k tokens. However, this approach makes training computation-intensive, because the cost of self-attention grows quadratically with the sequence length.
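The truncation behavior described above can be illustrated with a minimal sketch. The function name `truncate_history` and the whitespace-based token counter are assumptions made for this example; a real system would count tokens with the model's own tokenizer.

```python
def truncate_history(messages, max_tokens, count_tokens=lambda text: len(text.split())):
    """Keep the most recent messages whose total token count fits in max_tokens.

    count_tokens is a stand-in (whitespace split), not a real tokenizer.
    """
    kept = []
    used = 0
    # Walk from newest to oldest so that recent context survives.
    for message in reversed(messages):
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break
        kept.append(message)
        used += cost
    kept.reverse()  # restore chronological order
    return kept


history = [
    "user: my name is Ada",
    "assistant: nice to meet you Ada",
    "user: what is the capital of France",
    "assistant: Paris",
    "user: what is my name",
]
window = truncate_history(history, max_tokens=12)
```

With a budget of 12 tokens, only the last two messages fit, so the turn that stated the user's name is dropped and the final question can no longer be answered from the context alone.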

Language Model-Enabled Agents

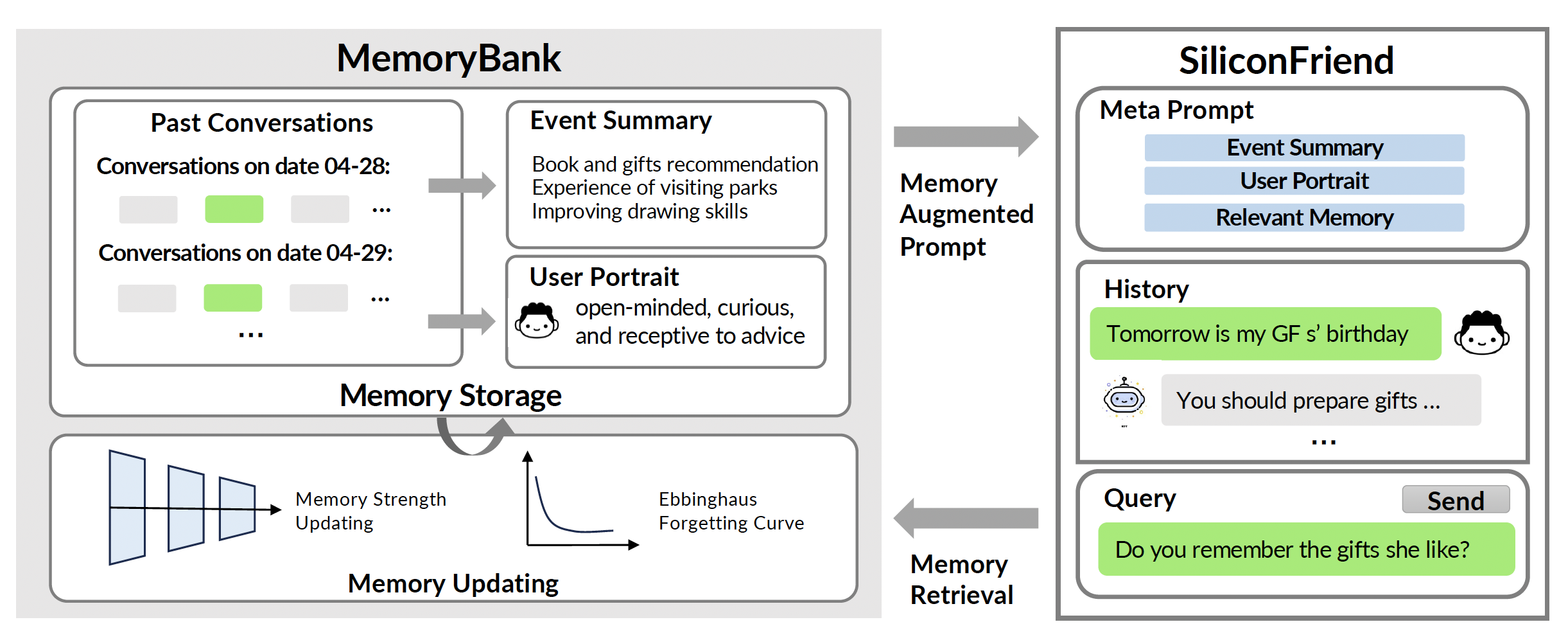

MemoryBank: Enhancing Large Language Models with Long-Term Memory [1]

MemoryBank enables models to recall relevant memories, evolve through continual memory updates, and adapt to a user’s personality over time by summarizing information from previous interactions.

- Each conversation turn and each event summary is treated as a memory piece $m$, which is pre-encoded into a contextual representation $h_m$ by the encoder model $E(\cdot)$.

- The current conversation context $c$ is encoded by $E(\cdot)$ into $h_c$, which serves as the query to search the memory store $M$ for the most relevant memory.

- The Ebbinghaus forgetting curve is an exponential decay model, $R=e^{-\frac{t}{S}}$, where $R$ is the memory retention, $t$ is the time elapsed since learning the information, $e$ is approximately 2.71828, and $S$ is the memory strength, which changes based on factors such as the depth of learning and the amount of repetition.

- $S$ is modeled as a discrete value and initialized to 1 when a memory piece is first mentioned in a conversation. When the piece is recalled during a conversation, $S$ is increased by 1 and $t$ is reset to 0, so the piece persists longer and is forgotten with a lower probability.
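The retrieval and forgetting rules in the list above can be sketched as follows. This is an illustration under stated assumptions, not the MemoryBank implementation: the class `MemoryPiece`, the helper names, the toy two-dimensional vectors standing in for the encoder output $h_m$, and the use of cosine similarity as the relevance measure are all choices made for this example.

```python
import math
from dataclasses import dataclass


@dataclass
class MemoryPiece:
    text: str
    vector: list           # h_m, assumed to come from some encoder E
    strength: int = 1      # S, initialized to 1 on first mention
    elapsed: float = 0.0   # t, time since the piece was learned or last recalled

    def retention(self) -> float:
        """R = exp(-t / S)."""
        return math.exp(-self.elapsed / self.strength)

    def tick(self, dt: float) -> None:
        """Advance time for this piece."""
        self.elapsed += dt

    def recall(self) -> None:
        """Reinforce the piece: S increases by 1 and t resets to 0."""
        self.strength += 1
        self.elapsed = 0.0


def cosine(u, v):
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm if norm else 0.0


def retrieve(query_vector, memory):
    """Return the piece most similar to the query h_c and reinforce it."""
    best = max(memory, key=lambda m: cosine(query_vector, m.vector))
    best.recall()
    return best


memory = [
    MemoryPiece("likes hiking", [1.0, 0.0]),
    MemoryPiece("works as a nurse", [0.0, 1.0]),
]
for piece in memory:
    piece.tick(2.0)                      # two time units pass
hit = retrieve([0.9, 0.1], memory)       # recalls "likes hiking"
for piece in memory:
    piece.tick(2.0)                      # two more time units pass
```

After this run the recalled piece has $S=2$ and $t=2$, giving $R=e^{-1}$, while the piece that was never recalled has $S=1$ and $t=4$, giving $R=e^{-4}$, so the recalled memory decays noticeably more slowly.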

Augmenting Language Models with Long-Term Memory [2]

Language Models Augmented with Long-Term Memory (LONGMEM) enables LLMs to memorize long histories. It uses a decoupled network architecture in which the original backbone LLM is frozen and serves as the memory encoder, while an adaptive residual side-network serves as the memory retriever.

The three key components are the frozen backbone LLM, SideNet, and the Cached Memory Bank:

- The embedding layer of the backbone LLM first encodes the input $\{x_i\}_{i=1}^{|x|}$ into the embedding space and outputs the initial hidden states $H_{\text{LLM}}^0\in \mathbb{R}^{|x|\times E}$, where $E$ is the hidden dimension.

- Each successive Transformer decoder layer of the frozen backbone LLM computes new hidden states from those of the previous layer, $H_{\text{LLM}}^{l'}=f_{\theta_{\text{LLM}}^{l'}}(H_{\text{LLM}}^{l'-1}), \forall l'\in [1,L']$, where $L'$ is the total number of layers in the backbone LLM.

- The key-value pairs used for self-attention at the $m$-th Transformer decoder layer are stored in the Cached Memory Bank, a cached head-wise vector queue $Z_k \in \mathbb{R}^{H\times M \times d}$, where $H$ is the number of attention heads, $M$ is the memory bank size, and $d$ is the dimension of each head.

- The SideNet module takes all current input hidden states from the backbone LLM and the past key-value pairs in Cached Memory Bank for computing memory-augmented representations.

- SideNet consists of $(L-1)$ normal Transformer decoder layers for the hidden states from the current input, and one special memory-augmented decoder layer for the top relevant key-value pairs in memory.

- The memory bank stores cached key-value pairs at the level of token chunks, dividing the $M$ cached tokens into $M/csz$ chunks. A chunk is an $n$-gram of $csz$ consecutive tokens, where $csz$ is the chunk size. Mean pooling over the chunk-size dimension yields the key vector used for retrieval.
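The chunk-level retrieval described in the last bullet can be sketched in plain Python. This is a simplified illustration for a single attention head; the function names `chunk_keys` and `top_k_chunks`, the toy vectors, and the use of a dot product to score chunks are assumptions for the example rather than details taken from the text above.

```python
def chunk_keys(keys, csz):
    """Mean-pool per-token key vectors into one retrieval key per chunk."""
    if len(keys) % csz != 0:
        raise ValueError("number of cached tokens must be a multiple of csz")
    pooled = []
    for start in range(0, len(keys), csz):
        chunk = keys[start:start + csz]
        dim = len(chunk[0])
        # Average over the chunk-size dimension, one value per feature.
        pooled.append([sum(vec[j] for vec in chunk) / csz for j in range(dim)])
    return pooled


def top_k_chunks(query, pooled_keys, k):
    """Indices of the k chunks whose pooled key scores highest against the query.

    Dot-product scoring is an assumption made for this sketch.
    """
    scores = [sum(q * x for q, x in zip(query, key)) for key in pooled_keys]
    ranked = sorted(range(len(scores)), key=lambda i: scores[i], reverse=True)
    return ranked[:k]


# M = 6 cached tokens and csz = 2 give M / csz = 3 chunks (one head, d = 2).
cached_keys = [
    [1.0, 0.0], [1.0, 0.0],
    [0.0, 1.0], [0.0, 1.0],
    [1.0, 1.0], [0.0, 0.0],
]
pooled = chunk_keys(cached_keys, csz=2)
best = top_k_chunks([0.0, 1.0], pooled, k=2)
```

Retrieving whole chunks rather than single tokens means that the retrieved key-value pairs form contiguous spans of text, and the number of candidates to score shrinks from $M$ to $M/csz$.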

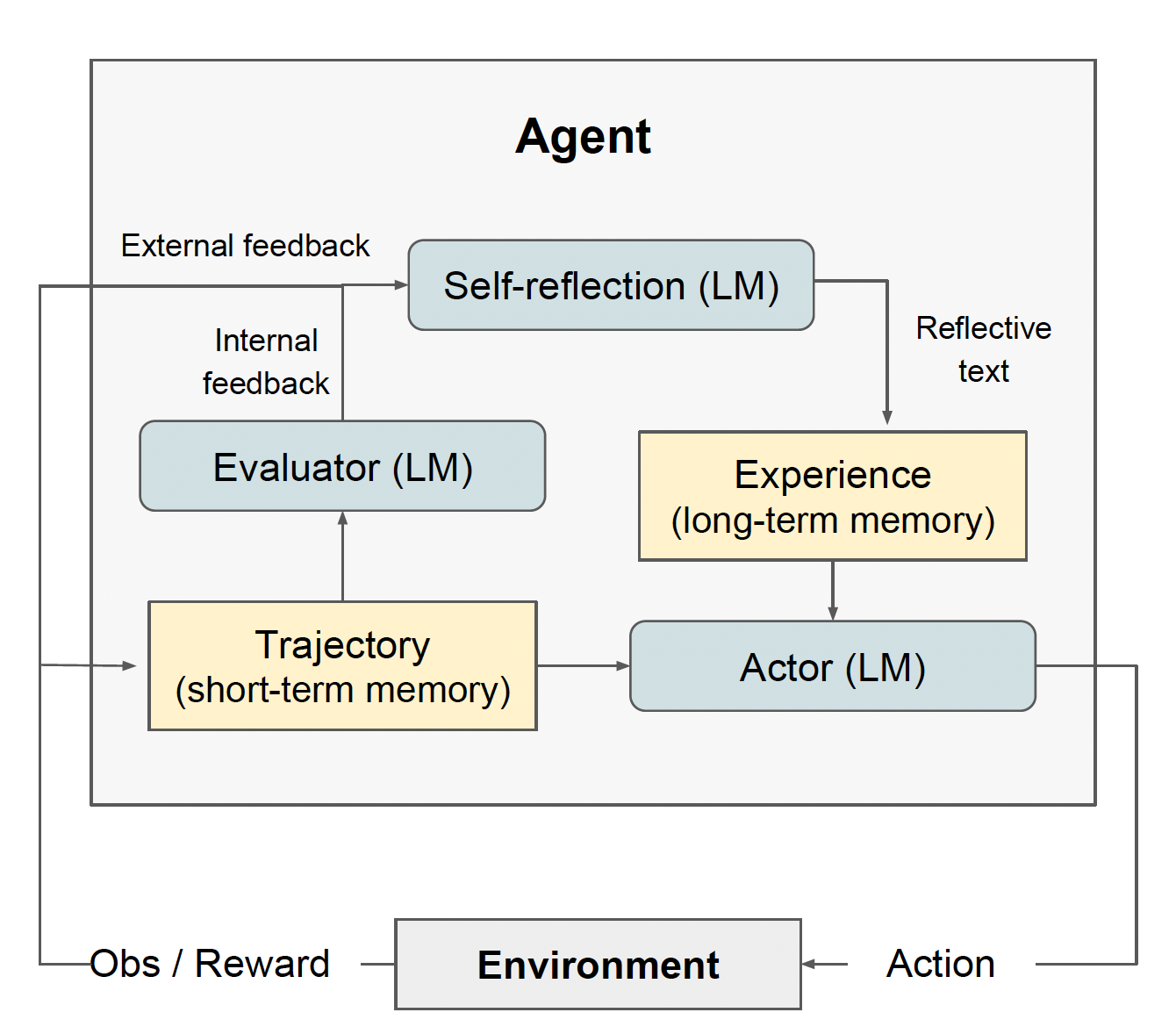

Reflexion: Language Agents with Verbal Reinforcement Learning [3]

Reflexion uses verbal reinforcement to help agents learn from prior failings. Reflexion converts binary or scalar feedback from the environment into verbal feedback in the form of a textual summary, which is then added as additional context for the LLM agent in the next episode.

- Reflexion uses three distinct models: an Actor $M_a$ generates text and actions; an Evaluator $M_e$ scores the outputs produced by $M_a$; and a Self-Reflection model $M_{sr}$ generates verbal reinforcement cues to help the Actor improve.

- The trajectory history serves as the short-term memory, while outputs from the Self-Reflection model are stored in long-term memory.

- The process: the Actor produces a trajectory $\tau_0$ by interacting with the environment, and the Evaluator produces a score $r_0$ for it. The Self-Reflection model analyzes the pair $\{\tau_0, r_0\}$ to produce verbal experience feedback $sr_0$, which is stored in memory (with an upper bound on the total number of stored experiences). The Actor, Evaluator, and Self-Reflection models work together across trials until the Evaluator deems $\tau_t$ to be correct.

- Self-reflection is triggered when the agent executes the same action and receives the same response for more than 3 cycles, or when the number of actions taken in the current environment exceeds 30 (inefficient planning).
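The trial loop and the reflection triggers in the list above can be sketched as follows. The three models are passed in as plain callables, and the toy stand-ins at the bottom are hypothetical; in practice each would be an LLM call. The function names, the default memory bound of 3, and the trial limit are assumptions for this example.

```python
from collections import deque


def reflexion_loop(actor, evaluator, reflector, max_trials=5, memory_size=3):
    """Run trials until the evaluator accepts a trajectory or trials run out."""
    long_term_memory = deque(maxlen=memory_size)  # bounded store of reflections
    for trial in range(max_trials):
        trajectory = actor(list(long_term_memory))   # tau_t
        score = evaluator(trajectory)                # r_t
        if score == 1:
            return trajectory, list(long_term_memory), trial
        # sr_t: verbal feedback added as context for the next trial.
        long_term_memory.append(reflector(trajectory, score))
    return None, list(long_term_memory), max_trials


def should_reflect(steps, repeat_limit=3, action_limit=30):
    """steps is a list of (action, response) pairs from the current trial."""
    if len(steps) > action_limit:
        return True  # too many actions: inefficient planning
    run = 1
    for prev, cur in zip(steps, steps[1:]):
        run = run + 1 if cur == prev else 1
        if run > repeat_limit:
            return True  # same action and response repeated too often
    return False


# Toy stand-ins for the three models (hypothetical, for illustration only).
def toy_actor(reflections):
    return f"guess {len(reflections) + 1}"


def toy_evaluator(trajectory):
    return 1 if trajectory == "guess 3" else 0


def toy_reflector(trajectory, score):
    return f"{trajectory} scored {score}; try a larger number"


result, reflections, trials = reflexion_loop(toy_actor, toy_evaluator, toy_reflector)
```

In this toy run the Actor fails twice, accumulates two reflections, and succeeds on the third trial. The `deque` with `maxlen` mirrors the upper bound on stored experiences: once it is full, the oldest reflection is discarded.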

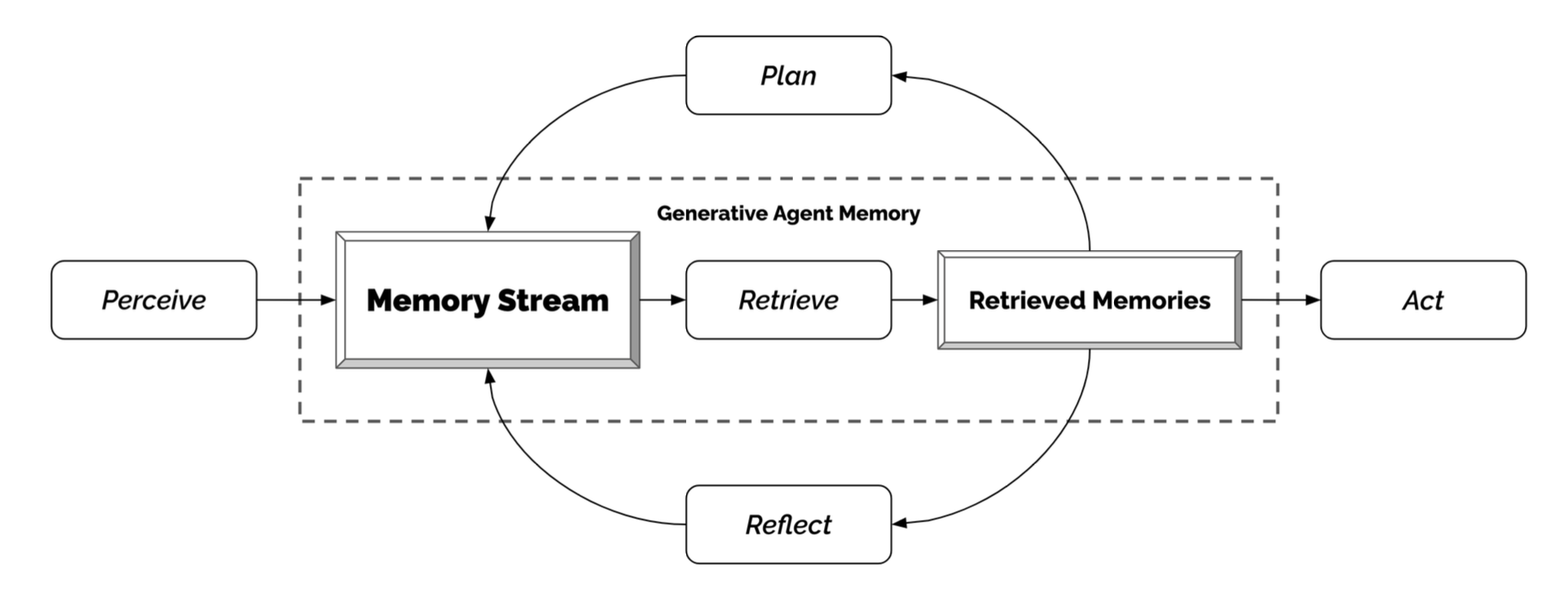

Generative agents: Interactive simulacra of human behavior [4]

This work simulates 25 agents for 2 days in a simulated sandbox environment. The agents interact with the world through their actions and engage in natural language communication with each other. Social dynamics unfold among multiple agents.

Success in this simulation necessitates an approach that can retrieve relevant events and interactions over an extended period, reflect on those memories to generalize and draw higher-level inferences, and apply that reasoning to formulate plans and reactions that make sense both in the current moment and in the longer-term trajectory of the agent's behavior.

To construct an agent, a memory stream is employed to record, in natural language, a comprehensive list of the agent's experiences. Based on the agent's perceptions, the architecture retrieves relevant memories and uses those retrieved memories to determine subsequent actions. The retrieved memories also contribute to the formation of longer-term plans and the generation of higher-level reflections, both of which are added to the memory stream for future reference.

Memory retrieval

There are many possible implementations of a retrieval function, depending on what is important for the agent to consider when deciding how to act. One effective way to score how important a memory is, is to directly ask the language model to output an integer score, using a prompt such as the following:

On the scale of 1 to 10, where 1 is purely mundane (e.g., brushing teeth, making bed) and 10 is extremely poignant (e.g., a break up, college acceptance), rate the likely poignancy of the following piece of memory.

Memory: buying groceries at The Willows Market and Pharmacy

Rating: <fill in>
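A prompt like the one above can be wrapped in a small helper that fills in the memory and parses the model's reply. This is a minimal sketch: `rate_importance`, the `complete` callable, and `fake_model` are hypothetical names introduced for the example, and clamping the parsed value to the 1 to 10 range is a defensive choice made here, not something stated in the post.

```python
import re

PROMPT_TEMPLATE = (
    "On the scale of 1 to 10, where 1 is purely mundane "
    "(e.g., brushing teeth, making bed) and 10 is extremely poignant "
    "(e.g., a break up, college acceptance), rate the likely poignancy "
    "of the following piece of memory.\n"
    "Memory: {memory}\n"
    "Rating: "
)


def rate_importance(memory, complete):
    """Ask the model for a 1-10 rating and parse the first integer in the reply.

    complete is any callable that maps a prompt string to a reply string.
    """
    reply = complete(PROMPT_TEMPLATE.format(memory=memory))
    match = re.search(r"\d+", reply)
    if match is None:
        raise ValueError(f"no integer found in reply: {reply!r}")
    # Clamp to the scale in case the model answers outside 1-10.
    return min(10, max(1, int(match.group())))


def fake_model(prompt):
    # Stand-in for a real language model call; always answers 2.
    return " 2"


score = rate_importance(
    "buying groceries at The Willows Market and Pharmacy", fake_model
)
```

Parsing the first integer keeps the helper tolerant of replies that add extra words around the number, at the cost of misreading a reply that mentions another number first.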

Reflection

Reflections are higher-level, more abstract thoughts generated by the agent. They are included alongside other observations during retrieval. Reflections are generated periodically, roughly two or three times a day. To produce them, the language model is prompted to extract insights and to cite the particular records that served as evidence for each insight.

Findings

First, synthesizing an increasingly large set of memories posed a challenge not only in retrieving the most relevant pieces of information but also in determining the appropriate place to execute an action, given the growing number of locations that the agent had learned about. As a result, some agents chose less typical locations for their actions, potentially making their behavior less believable over time.

Second, erratic behaviors were caused by misclassification of what is considered proper behavior, especially when the physical norms of certain locations, which are hard to convey in natural language, did not percolate to the agents. Instruction tuning also seemed to make the agents overly cooperative with one another.